Big Data technology has been one of the key engines driving the new industrial revolution. However, most current Big Data research has been devoted to single-modal data analysis, which leaves a large performance gap when algorithms are applied to each modality separately. Although significant progress has been made, single-modal data is often insufficient to derive accurate and robust models in many applications. Multimodal data is the most general form of information representation and delivery in the real world, and multimodal data analytics algorithms often outperform single-modal ones on many real-world problems. With the rapid development of Big Data technology and its remarkable applications in many fields, multimodal Big Data is a timely topic. This workshop aims to generate momentum around this topic of growing interest and to encourage interdisciplinary interaction and collaboration among the Natural Language Processing (NLP), computer vision, audio processing, machine learning, multimedia, robotics, Human-Computer Interaction (HCI), cloud computing, Internet of Things (IoT), and geospatial computing communities. It serves as a forum that brings together active researchers and practitioners from academia and industry to share their recent advances in this promising area.

MMAI 2023 Final Accepted Papers & Program Schedule

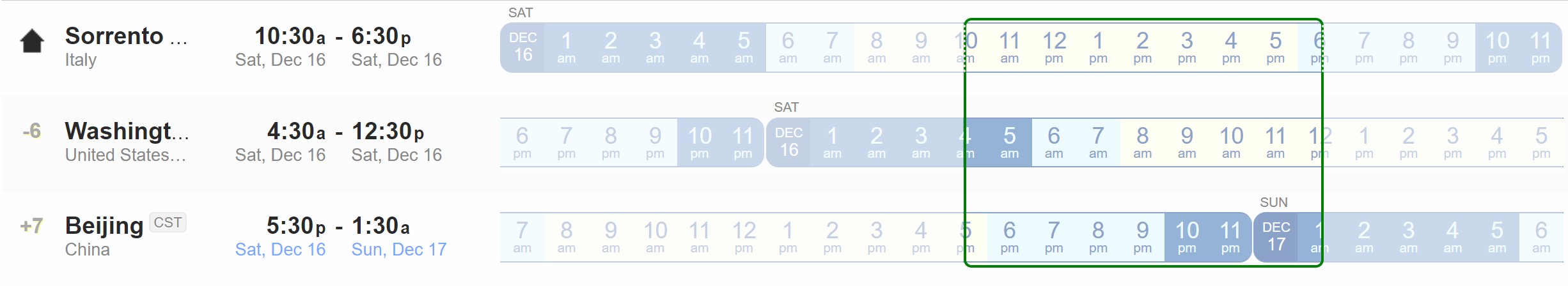

Dec. 16, 2023, Italy (GMT+1)

Virtually: Please join IEEE Big Data Workshop - MMAI 2023 using the link that was sent to you.

Physically: Hilton Sorrento Palace, Conference Room - Nettuno 3

| Type | Pages | Paper Title | Author(s) |
|---|---|---|---|
| Session I (Italy Time 10:30-12:30) | | | |
| Opening Remarks | | | |
| Full | 10 | LoRA-like Calibration for Multimodal Deception Detection using ATSFace Data | Shun-Wen Hsiao and Cheng-Yuan Sun |
| Full | 10 | Multimodal Large Language Models: A Survey | Jiayang Wu, Wensheng Gan, Zefeng Chen, Shicheng Wan, and Philip S. Yu |
| Short | 6 | Semantic Prompt Based Multi-Scale Transformer for Few-Shot Classification | Hongwu Liu, Shouhong Wan, Peiquan Jin, and Xin Wang |
| Short | 8 | Impact of Mixed Multimodalities and Size Dependence on Performance of Object Detection on Multimodal Satellite Imagery | Yuri Gordienko, Nikita Gordienko, Oleksandr Rokovyi, Oleg Alienin, Andrii Polukhin, and Sergii Stirenko |
| Extended Abstract | 5 | Neural Crystals | Sofia Karamintziou, Thanassis Mavropoulos, Dimos Ntioudis, Georgios Meditskos, Stefanos Vrochidis, and Ioannis (Yiannis) Kompatsiaris |
| Poster | 4 | CLIP-PubOp: A CLIP-based Multimodal Representation Fusion Method for Public Opinion | Zhibo Wang, Yi Guo, and Jiaojiao Fu |
| Poster | 4 | Audio-visual Neural Face Generation with Emotional Stimuli | Juheon Hwang and Jiwoo Kang |
| Lunch Break | | | |
| Session II (Italy Time 14:00-16:00) | | | |
| Short | 8 | De-SaTE: Denoising Self-attention Transformer Encoders for Li-ion Battery Health Prognostics | Gaurav Shinde, Rohan Mohapatra, Pooja Krishan, and Saptarshi Sengupta |
| Short | 6 | Multimodal One-class Learning for Malicious Online Content Detection | Roberto Corizzo, Nora Lewis, Lucas P. Damasceno, Allison Shafer, Charles C. Cavalcante, and Zois Boukouvalas |
| Full | 10 | Exploiting Multiple Sequence Lengths in Fast End to End Training for Image Captioning | Jia Cheng Hu, Roberto Cavicchioli, and Alessandro Capotondi |
| Short | 8 | Evaluating CLIP's Understanding on Relationships in a Blocks World | Kairui Zhang and Martha Lewis |
| Short | 8 | New Finger Photo Databases with Presentation Attacks and Demographics | Anudeep Vurity and Emanuela Marasco |
| Short | 8 | Understanding the Language of ADHD and Autism Communities on Social Media | Niloofar Kalantari, Amirreza Payandeh, Marcos Zampieri, and Vivian Genaro Motti |
| Poster | 4 | Gender Classification Accuracy via Two-Dimensional Body Joints using Convolutional Neural Networks | Cheng-En Sung and Nada Attar |
| Coffee Break | | | |
| Session III (Italy Time 16:00-18:00) | | | |
| Extended Abstract | 5 | Late Fusion-based Distributed Multimodal Learning | Flavio Giobergia and Elena Baralis |
| Poster | 4 | A Supervised Autoencoder for Human Activity Recognition with Inertial Sensors | Jaehyeok An, Younghoon Kwon, and Yoon-Sik Cho |
| Extended Abstract | 5 | Using the CARLA Simulator to Train A Deep Q Self-Driving Car to Control a Real-World Counterpart on A College Campus | Joseph May, Khem Poudel, Samir Poudel, Sammi Hamdan, and Jorge Vargas |
| Full | 10 | Enhancing Scientific Image Classification through Multimodal Learning: Insights from Chest X-Ray and Atomic Force Microscopy Datasets | David Meshnick, Nahal Shahini, Debargha Ganguly, Yinghui Wu, Roger French, and Vipin Chaudhary |
| Short | 6 | Character-based Outfit Generation with Vision-augmented Style Extraction via LLMs | Najmeh Forouzandehmehr, Yijie Cao, Nikhil Thakurdesai, Ramin Giahi, Luyi Ma, Nima Farrokhsiar, Jianpeng Xu, Evren Korpeoglu, and Kannan Achan |
| Short | 8 | Predicting Potential School Shooters from Social Media Posts | Alana Cedeno, Rachel Liang, and Sheikh Rabiul Islam |
| Closing Remarks | | | |

*The program schedule is subject to change.